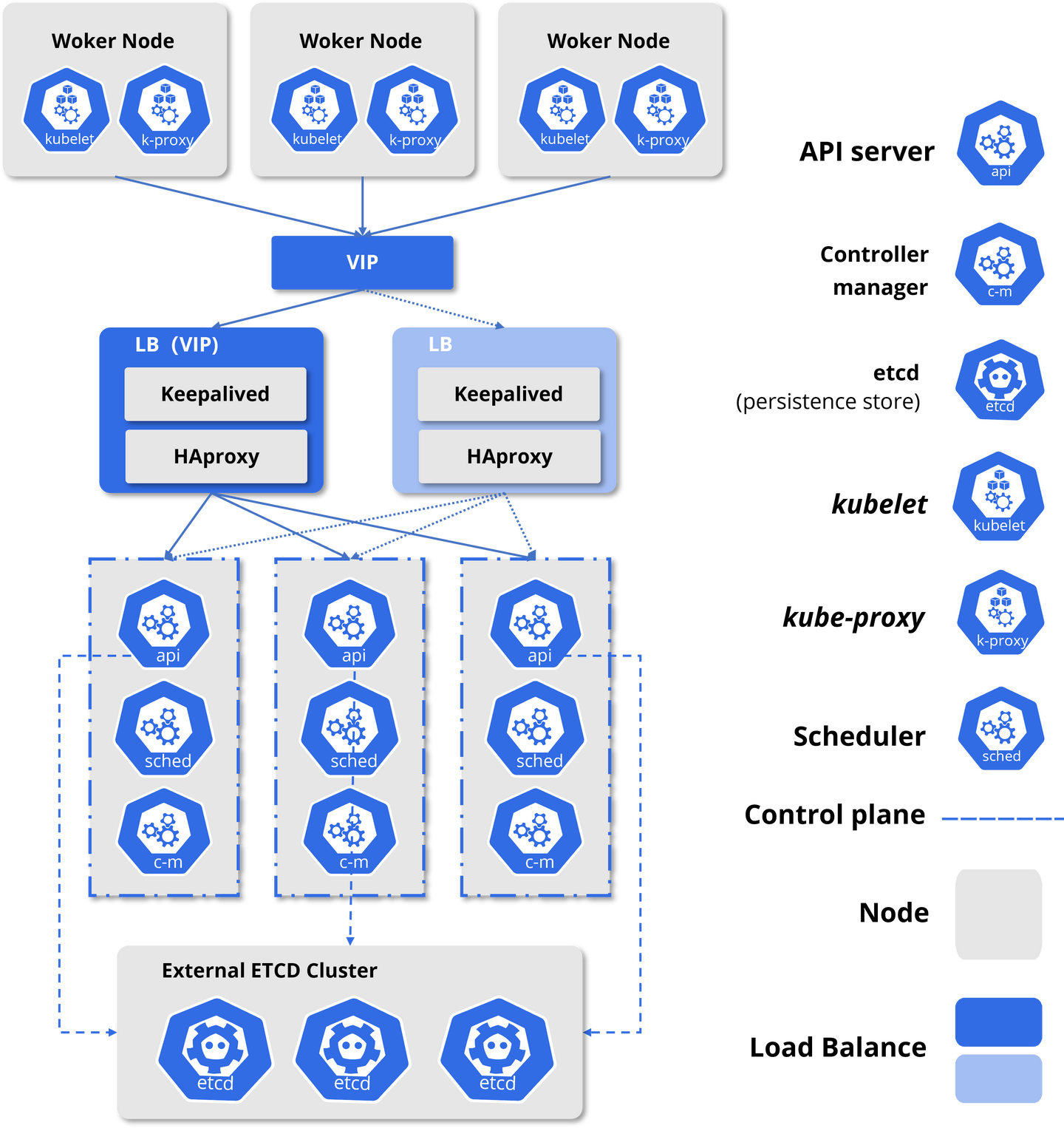

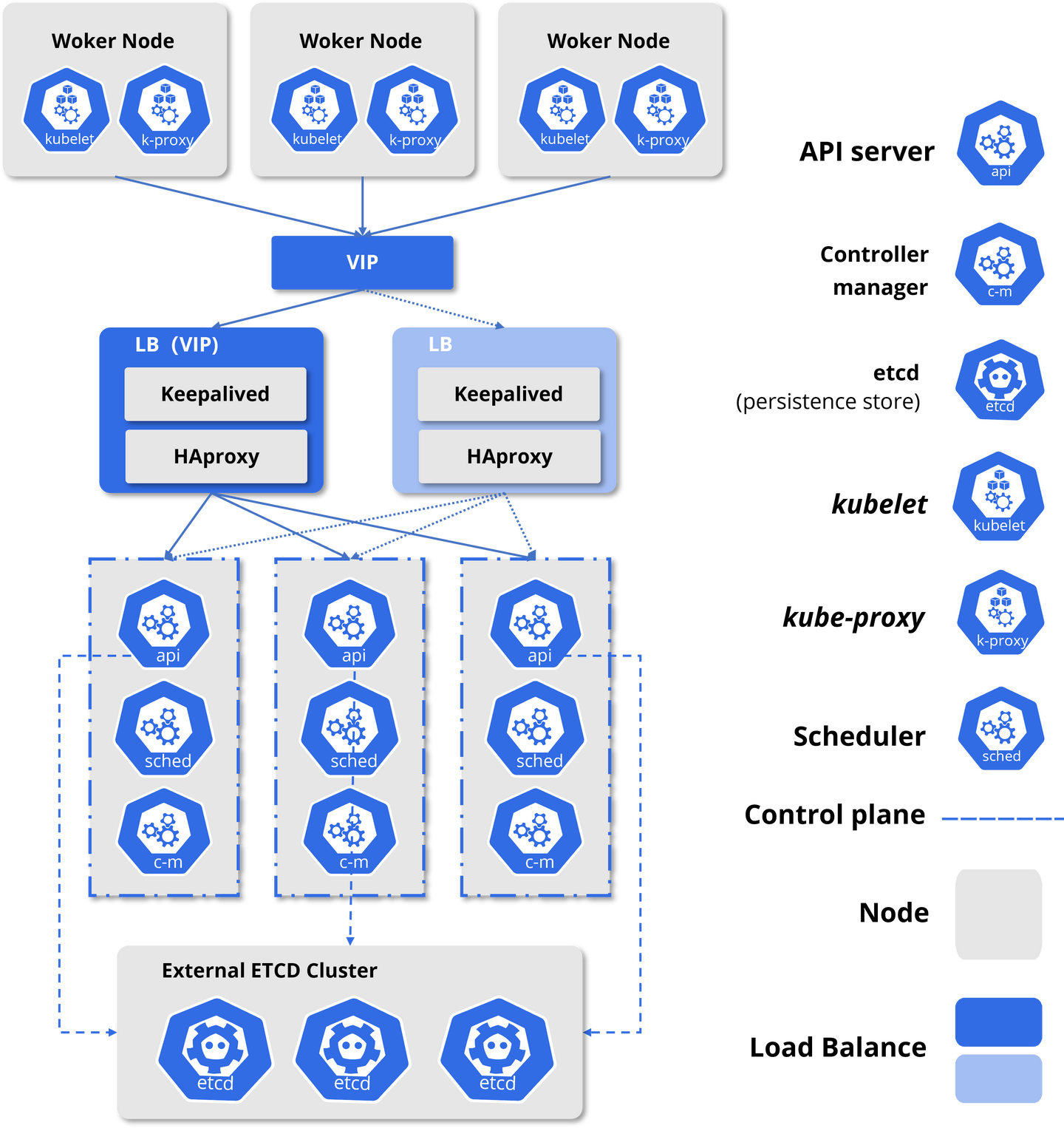

Architecture

Environment

| Cluster Role | IP Address | Components | Hostname |
|---|---|---|---|
| Master-01 | 192.168.3.11 | kube-apiserver, kube-controller-manager, kube-scheduler, ansible | k8snode1 |
| Master-02 | 192.168.3.12 | kube-apiserver, kube-controller-manager, kube-scheduler | k8snode2 |
| Worker-01 | 192.168.3.13 | kubelet, kube-proxy, etcd | k8snode3 |
| Worker-02 | 192.168.3.14 | kubelet, kube-proxy, etcd, keepalived, haproxy | k8snode4 |
| Worker-03 | 192.168.3.15 | kubelet, kube-proxy, etcd, keepalived, haproxy | k8snode5 |
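The table pairs each address with a hostname, so the nodes can resolve each other through `/etc/hosts` entries. A minimal sketch, assuming the lowercase hostnames `k8snode1` to `k8snode5` and assuming the entries are only printed for review rather than written to any file; the function name `gen_hosts_entries` is made up for this illustration:

```shell
#!/bin/sh
# Print /etc/hosts-style lines for the five nodes listed in the table.
# Assumption: node N has the address 192.168.3.(10+N) and the name k8snodeN.
gen_hosts_entries() {
  for i in 1 2 3 4 5; do
    printf '192.168.3.%d k8snode%d\n' "$((10 + i))" "$i"
  done
}

gen_hosts_entries
```

The output can be compared against the table before appending it to `/etc/hosts` on each node.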

Setup

Prepare the environment on the deploy server (k8snode1)


    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    192.168.3.[11:15]
    [root@node1 ~]
    [root@node1 ~]
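The `192.168.3.[11:15]` line looks like Ansible's inventory range syntax, where both ends of the range are inclusive, so it would cover all five nodes. A small sketch of that expansion, assuming a single numeric range without zero padding; the helper name `expand_host_range` is hypothetical and is not part of Ansible:

```shell
#!/bin/sh
# Expand a host pattern such as 192.168.3.[11:15] into one address per line.
# Handles one numeric range, inclusive on both ends, without zero padding.
# Note: plain sh has no local variables, so the names below are global.
expand_host_range() {
  case $1 in
    *\[*:*\]*)
      prefix=${1%%\[*}      # text before '['
      rest=${1#*\[}         # text after '[', e.g. "11:15]"
      range=${rest%%\]*}    # text between the brackets, e.g. "11:15"
      suffix=${rest#*\]}    # text after ']'
      start=${range%%:*}
      end=${range#*:}
      i=$start
      while [ "$i" -le "$end" ]; do
        printf '%s%s%s\n' "$prefix" "$i" "$suffix"
        i=$((i + 1))
      done
      ;;
    *)
      # No range in the pattern: it is already a single host.
      printf '%s\n' "$1"
      ;;
  esac
}

expand_host_range '192.168.3.[11:15]'
```

Run as written, this prints the five addresses 192.168.3.11 through 192.168.3.15, one per line.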

Set up Kubernetes with kubeasz

https://github.com/easzlab/kubeasz


    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [etcd]
    192.168.3.[13:15]

    [kube_master]
    192.168.3.11 k8s_nodename='master-01'
    192.168.3.12 k8s_nodename='master-02'

    [kube_node]
    192.168.3.13 k8s_nodename='worker-01'
    192.168.3.14 k8s_nodename='worker-02'
    192.168.3.15 k8s_nodename='worker-03'

    [harbor]

    [ex_lb]
    192.168.3.15 LB_ROLE=backup EX_APISERVER_VIP=192.168.3.250 EX_APISERVER_PORT=8443
    192.168.3.14 LB_ROLE=master EX_APISERVER_VIP=192.168.3.250 EX_APISERVER_PORT=8443

    [chrony]
    192.168.3.11

    [all:vars]
    SECURE_PORT="6443"
    CONTAINER_RUNTIME="containerd"
    CLUSTER_NETWORK="calico"
    PROXY_MODE="ipvs"
    SERVICE_CIDR="10.10.0.0/16"
    CLUSTER_CIDR="192.10.0.0/16"
    NODE_PORT_RANGE="30000-32767"
    CLUSTER_DNS_DOMAIN="cluster.k8slab"
    bin_dir="/opt/kube/bin"
    base_dir="/etc/kubeasz"
    cluster_dir="{{ base_dir }}/clusters/k8slab"
    ca_dir="/etc/kubernetes/ssl"
    k8s_nodename=''
    ansible_python_interpreter=/usr/bin/python
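The `[etcd]` group lists `192.168.3.[13:15]`, which is three members. etcd needs a majority of members to agree on writes, so the member count determines how many failures the cluster can survive. A short sketch of that arithmetic; the function names are made up for this illustration:

```shell
#!/bin/sh
# Majority (quorum) size for an etcd cluster of N members: floor(N/2) + 1.
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while a majority is still available.
etcd_fault_tolerance() {
  echo $(( ($1 - 1) / 2 ))
}

etcd_quorum 3            # prints 2
etcd_fault_tolerance 3   # prints 1
```

With the three members in this inventory, one etcd node can be lost without losing quorum. A fourth member would raise the quorum to three without adding any tolerance, which is why odd member counts are the usual choice.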

    [root@node1 ~]
    INSTALL_SOURCE: "online"
    OS_HARDEN: true
    CA_EXPIRY: "876000h"
    CERT_EXPIRY: "438000h"
    CHANGE_CA: false
    CLUSTER_NAME: "cluster1"
    CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"
    K8S_VER: "1.28.1"
    K8S_NODENAME: "{%- if k8s_nodename != '' -%} \
                        {{ k8s_nodename|replace('_', '-')|lower }} \
                   {%- else -%} \
                        {{ inventory_hostname }} \
                   {%- endif -%}"
    ETCD_DATA_DIR: "/var/lib/etcd"
    ETCD_WAL_DIR: ""
    ENABLE_MIRROR_REGISTRY: true
    INSECURE_REG:
      - "http://easzlab.io.local:5000"
      - "https://{{ HARBOR_REGISTRY }}"
    SANDBOX_IMAGE: "easzlab.io.local:5000/easzlab/pause:3.9"
    CONTAINERD_STORAGE_DIR: "/var/lib/containerd"
    DOCKER_STORAGE_DIR: "/var/lib/docker"
    DOCKER_ENABLE_REMOTE_API: false
    MASTER_CERT_HOSTS:
      - "192.168.3.11"
      - "192.168.3.12"
      - "192.168.3.250"
      - "k8s.easzlab.io"
    NODE_CIDR_LEN: 24
    KUBELET_ROOT_DIR: "/var/lib/kubelet"
    MAX_PODS: 110
    KUBE_RESERVED_ENABLED: "no"
    SYS_RESERVED_ENABLED: "no"
    FLANNEL_BACKEND: "vxlan"
    DIRECT_ROUTING: false
    flannel_ver: "v0.22.2"
    CALICO_IPV4POOL_IPIP: "Always"
    IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"
    CALICO_NETWORKING_BACKEND: "bird"
    CALICO_RR_ENABLED: false
    CALICO_RR_NODES: []
    calico_ver: "v3.24.6"
    calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"
    cilium_ver: "1.13.6"
    cilium_connectivity_check: true
    cilium_hubble_enabled: false
    cilium_hubble_ui_enabled: false
    kube_ovn_ver: "v1.11.5"
    OVERLAY_TYPE: "full"
    FIREWALL_ENABLE: true
    kube_router_ver: "v1.5.4"
    dns_install: "yes"
    corednsVer: "1.11.1"
    ENABLE_LOCAL_DNS_CACHE: true
    dnsNodeCacheVer: "1.22.23"
    LOCAL_DNS_CACHE: "169.254.20.10"
    metricsserver_install: "yes"
    metricsVer: "v0.6.4"
    dashboard_install: "yes"
    dashboardVer: "v2.7.0"
    dashboardMetricsScraperVer: "v1.0.8"
    prom_install: "yes"
    prom_namespace: "monitor"
    prom_chart_ver: "45.23.0"
    kubeapps_install: "no"
    kubeapps_install_namespace: "kubeapps"
    kubeapps_working_namespace: "default"
    kubeapps_storage_class: "local-path"
    kubeapps_chart_ver: "12.4.3"
    local_path_provisioner_install: "no"
    local_path_provisioner_ver: "v0.0.24"
    local_path_provisioner_dir: "/opt/local-path-provisioner"
    nfs_provisioner_install: "no"
    nfs_provisioner_namespace: "kube-system"
    nfs_provisioner_ver: "v4.0.2"
    nfs_storage_class: "managed-nfs-storage"
    nfs_server: "192.168.1.10"
    nfs_path: "/data/nfs"
    network_check_enabled: true
    network_check_schedule: "*/5 * * * *"
    HARBOR_VER: "v2.6.4"
    HARBOR_DOMAIN: "harbor.easzlab.io.local"
    HARBOR_PATH: /var/data
    HARBOR_TLS_PORT: 8443
    HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"
    HARBOR_SELF_SIGNED_CERT: true
    HARBOR_WITH_NOTARY: false
    HARBOR_WITH_TRIVY: false
    HARBOR_WITH_CHARTMUSEUM: true
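Several of these values are easier to judge once converted into familiar units. The sketch below turns the `CA_EXPIRY` and `CERT_EXPIRY` durations into years (ignoring leap days) and works out how many `/24` subnets fit into a `/16`, matching `NODE_CIDR_LEN: 24` and the `/16` cluster CIDR from the inventory. It is plain arithmetic on the prefix lengths. Whether the chosen network plugin allocates pod addresses exactly this way is not covered here, and the function names are made up for this illustration:

```shell
#!/bin/sh
# Convert a duration such as "876000h" into whole years of 365 days.
hours_to_years() {
  echo $(( ${1%h} / 24 / 365 ))
}

# Number of subnets of prefix length $2 that fit into a network of prefix length $1.
node_subnets() {
  echo $(( 1 << ($2 - $1) ))
}

# Number of IPv4 addresses in a subnet of prefix length $1.
addrs_per_subnet() {
  echo $(( 1 << (32 - $1) ))
}

hours_to_years 876000h   # CA_EXPIRY: prints 100
hours_to_years 438000h   # CERT_EXPIRY: prints 50
node_subnets 16 24       # prints 256
addrs_per_subnet 24      # prints 256
```

So the CA is valid for about 100 years and the issued certificates for about 50, and a `/24` per node leaves room well above the `MAX_PODS: 110` limit.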

    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    [root@node1 ~]
    NAME        STATUS                     ROLES    AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
    master-01   Ready,SchedulingDisabled   master   19m   v1.28.1   192.168.3.11   <none>        CentOS Linux 7 (Core)   3.10.0-1160.71.1.el7.x86_64   containerd://1.6.23
    master-02   Ready,SchedulingDisabled   master   19m   v1.28.1   192.168.3.12   <none>        CentOS Linux 7 (Core)   3.10.0-1160.71.1.el7.x86_64   containerd://1.6.23
    worker-01   Ready                      node     17m   v1.28.1   192.168.3.13   <none>        CentOS Linux 7 (Core)   3.10.0-1160.71.1.el7.x86_64   containerd://1.6.23
    worker-02   Ready                      node     17m   v1.28.1   192.168.3.14   <none>        CentOS Linux 7 (Core)   3.10.0-1160.71.1.el7.x86_64   containerd://1.6.23
    worker-03   Ready                      node     17m   v1.28.1   192.168.3.15   <none>        CentOS Linux 7 (Core)   3.10.0-1160.71.1.el7.x86_64   containerd://1.6.23
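The node listing has the column layout of `kubectl get nodes -o wide`, and the setup counts as healthy when every node reports `Ready` (the masters additionally show `SchedulingDisabled`). A minimal sketch of a filter that prints any node not in that state, assuming the listing is piped in on standard input with its header line; the function name `not_ready_nodes` is made up for this illustration:

```shell
#!/bin/sh
# Read `kubectl get nodes` output on stdin and print the names of nodes whose
# STATUS is neither "Ready" nor "Ready,<something>" (e.g. SchedulingDisabled).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# Demo on sample lines; worker-09 is an invented failing node.
printf '%s\n' \
  'NAME STATUS ROLES AGE VERSION' \
  'master-01 Ready,SchedulingDisabled master 19m v1.28.1' \
  'worker-01 Ready node 17m v1.28.1' \
  'worker-09 NotReady node 17m v1.28.1' | not_ready_nodes
```

Against a live cluster the same filter could be used as `kubectl get nodes | not_ready_nodes`; empty output means all nodes are ready.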

    [root@node1 ~]
    NAME                                         READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-86b55cf789-6l69w     1/1     Running   0          17m
    calico-node-2tlph                            1/1     Running   0          17m
    calico-node-br4qf                            1/1     Running   0          17m
    calico-node-bxnpg                            1/1     Running   0          17m
    calico-node-r74ns                            1/1     Running   0          17m
    calico-node-tzcm9                            1/1     Running   0          17m
    coredns-7bc88ddb8b-dtk4t                     1/1     Running   0          16m
    dashboard-metrics-scraper-77b667b99d-gs6mt   1/1     Running   0          16m
    kubernetes-dashboard-74fb9f77fb-cd6pc        1/1     Running   0          16m
    metrics-server-dfb478476-q62nr               1/1     Running   0          16m
    node-local-dns-chmpp                         1/1     Running   0          16m
    node-local-dns-cphrq                         1/1     Running   0          16m
    node-local-dns-wmp4f                         1/1     Running   0          16m
    node-local-dns-z8hx7                         1/1     Running   0          16m
    node-local-dns-zbnv8                         1/1     Running   0          16m
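The pod listing has the layout of `kubectl get pods` for a single namespace, with every pod `Running` and `1/1` containers ready. A minimal sketch of a filter that prints pods that do not meet both conditions, assuming the listing arrives on standard input with its header line; the function name `unhealthy_pods` is made up for this illustration, and pods of finished jobs in `Completed` state would also be reported:

```shell
#!/bin/sh
# Read `kubectl get pods` output on stdin and print the names of pods that are
# not Running or whose READY column (ready/total) shows fewer ready containers.
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Demo on sample lines; the last two pods are invented failures.
printf '%s\n' \
  'NAME READY STATUS RESTARTS AGE' \
  'coredns-7bc88ddb8b-dtk4t 1/1 Running 0 16m' \
  'example-pod-a 0/1 Running 0 16m' \
  'example-pod-b 0/1 CrashLoopBackOff 5 16m' | unhealthy_pods
```

Against a live cluster this could be used as `kubectl get pods -n kube-system | unhealthy_pods`, where the `kube-system` namespace is an assumption based on the pod names shown.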

Live demo

K8s dashboard